Inferring Conceptual Knowledge from Unstructured Student Writing


Vivienne L. Ming (1,2) and Norma C. Ming (3,4)

(1) Socos LLC, Berkeley, CA 94703
(2) Redwood Center for Theoretical Neuroscience, UC Berkeley
(3) Nexus Research & Policy Center, San Francisco, CA 94105
(4) Graduate School of Education, UC Berkeley, Berkeley, CA 94720

9 Effective instruction depends on formative assessment to discover and monitor student

10understanding [1]. By revealing what students already know and what they need to learn, it

11enables teachers to build on existing knowledge and provide appropriate scaffolding [2]. If

12such information is both timely and specific, it can serve as valuable feedback to teachers

13and students and improve achievement [3][4]. Yet incorporating and interpreting ongoing,

14meaningful assessment into the learning environment remains a challenge for many reasons

15[5], and testing is often intrusive, demanding that teachers interrupt their regular instruction

16to administer the test. Our proposed solution to these problems is to build a system which

17relies on the wealth of unstructured data that students generate from the learning activities

18 their teachers already use. Using machine intelligence to analyze large quantities of

19passively collected data can free up instructors’ time to focus on improving their instruction,

20informed by their own data as well as those of other teachers and students. Building an

21 assessment tool which they can invisibly layer atop their chosen instructional methods

22affords them both autonomy and information. Here we present a model for inferring domain-

23specific conceptual knowledge directly from unstructured student writing from online class

24 discussion forums. Using only student discussion data from two unrelated courses –

25undergraduate introduction to biology and economics for MBA – we applied a hierarchical

26Bayesian

model to infer both

27patterns across students. This model extends hierarchical latent Dirichlet allocation (hLDA)

28[6] for concept modeling with a

29patterns

across students. As a proof of concept, we predicted

30the same approach may be applied to many other assessments and adaptive interventions

31recommendation. The model produced significantly better predictions compared with other

32 topic modeling techniques from the very first week of instruction, and the accuracy

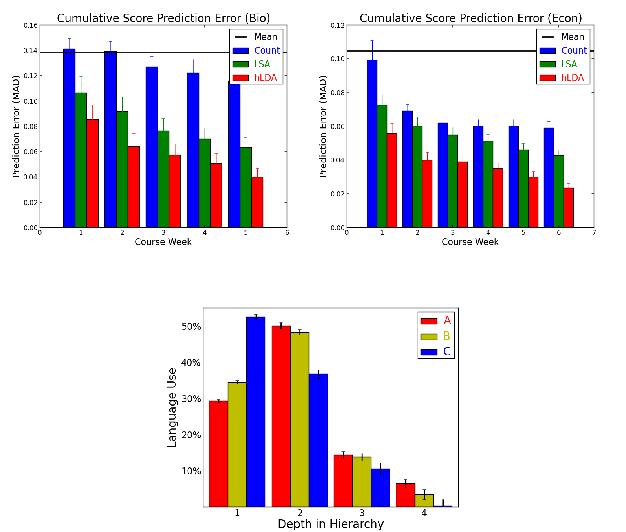

33improved with additional data collected over the duration of the course (Figure Figure).
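For readers unfamiliar with hLDA [6], the following minimal Python sketch (assuming NumPy) illustrates the basic generative process the model here builds on: each document draws a root-to-leaf path through a topic tree from a nested Chinese restaurant process, then assigns each of its words to one level along that path. It is a sketch of standard fixed-depth hLDA, not the authors' extended model. The function names, the depth of four, the hyperparameter values (`gamma`, `alpha`, `eta`), and treating one student's posts as one document are all illustrative assumptions.

```python
import numpy as np


def sample_ncrp_path(tree, depth, gamma, rng):
    """Draw a root-to-leaf path of `depth` nodes from a nested Chinese restaurant process.

    Each node is a tuple of child indices from the root (the root is ()).
    `tree` maps a node to the document counts of its existing children and is
    updated in place, so later documents tend to follow popular paths.
    """
    node = ()
    path = [node]
    for _ in range(depth - 1):
        counts = tree.setdefault(node, [])
        # Existing children are chosen in proportion to how many documents
        # already pass through them; a brand-new child in proportion to gamma.
        weights = np.array(counts + [gamma], dtype=float)
        choice = int(rng.choice(len(weights), p=weights / weights.sum()))
        if choice == len(counts):
            counts.append(0)  # open a new, more specialized subtopic
        counts[choice] += 1
        node = node + (choice,)
        path.append(node)
    return path


def generate_document(n_words, tree, topics, depth, gamma, alpha, eta, vocab_size, rng):
    """Generate one document (hypothetically, one student's posts) under basic hLDA."""
    path = sample_ncrp_path(tree, depth, gamma, rng)
    theta = rng.dirichlet([alpha] * depth)  # this document's mixture over levels
    words, levels = [], []
    for _ in range(n_words):
        level = int(rng.choice(depth, p=theta))
        node = path[level]
        if node not in topics:
            # Each tree node owns a topic: a distribution over the vocabulary.
            topics[node] = rng.dirichlet([eta] * vocab_size)
        words.append(int(rng.choice(vocab_size, p=topics[node])))
        levels.append(level)
    return words, levels


def level_summary(levels, depth):
    """Fraction of words at each level (0 = root, most generic) and the mean level."""
    counts = np.bincount(levels, minlength=depth)
    proportions = counts / counts.sum()
    mean_level = float(np.dot(np.arange(depth), proportions))
    return proportions, mean_level


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    tree, topics = {}, {}
    for student in range(3):
        _, levels = generate_document(60, tree, topics, depth=4, gamma=1.0,
                                      alpha=1.0, eta=0.5, vocab_size=200, rng=rng)
        proportions, mean_level = level_summary(levels, depth=4)
        print(student, np.round(proportions, 2), round(mean_level, 2))
```

Words assigned to deeper levels come from more specialized topics, so `level_summary` gives a simple per-student view of how much language sits at each depth. The mean level computed here is only an illustrative proxy and is not necessarily the depth parameter reported in the analysis.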

Further examination of the data also reveals that higher course grades are correlated with a slightly higher mean of the depth parameter in hLDA. Topics in the hLDA model are structured in a hierarchy learned from the data, with more specialized topics represented deeper in the hierarchy than more general topics. Figure […] depicts the percentage […] of four depth levels specified in the hierarchy. As shown, most of the language used by students who receive C’s resides at the topmost (most generic) level, while relatively greater percentages of the language used by students receiving A’s and B’s reside at deeper levels in the hierarchy. Finally, the conceptual layer showed consistent temporal patterns of concept expression within each course; these patterns were strong predictors of student performance and allowed easy clustering of students for potential targeted intervention. These results suggest the feasibility of mining student data to derive conceptual hierarchies and indicate that topic